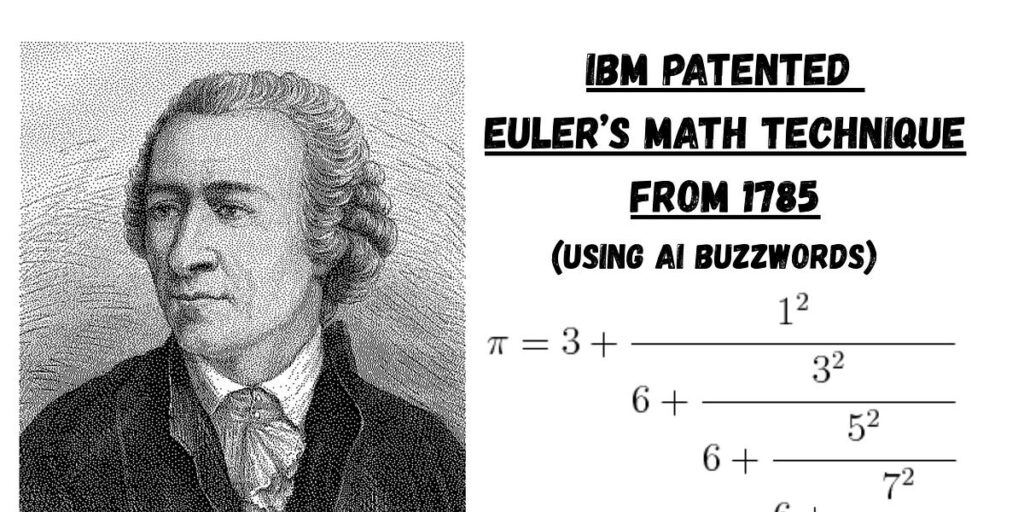

IBM has obtained a patent by applying the label ‘AI Interpretability’ to Generalized Continued Fractions and their series transformations. The patent covers using derivatives to find the convergents of generalized continued fractions. Interestingly, the inventors implemented a technique from Gauss, Euler, and Ramanujan in PyTorch and called backward() on the computation graph. As a result, IBM can now demand licensing fees for a mathematical method that has existed for over two centuries. The code is available on Google Colab and GitHub for reference.
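
A classic example of such a series transformation is Euler's continued fraction formula, which rewrites a finite sum of running products as a generalized continued fraction:

$$\sum_{k=0}^{n} \prod_{j=0}^{k} a_j = \cfrac{a_0}{1 - \cfrac{a_1}{1 + a_1 - \cfrac{a_2}{1 + a_2 - \cdots - \cfrac{a_n}{1 + a_n}}}}$$

The minimal sketch below checks the identity with exact rational arithmetic in plain Python. The function names are hypothetical, and this is not code from the paper, the patent, or the linked notebooks.

```python
from fractions import Fraction


def series_sum(a):
    """Return a0 + a0*a1 + ... + a0*a1*...*an as an exact Fraction."""
    total, running_product = Fraction(0), Fraction(1)
    for ak in a:
        running_product *= ak
        total += running_product
    return total


def euler_continued_fraction(a):
    """Evaluate a0/(1 - a1/(1 + a1 - a2/(... - an/(1 + an)))) from the bottom up."""
    a = [Fraction(x) for x in a]
    n = len(a) - 1
    if n == 0:
        return a[0]
    tail = 1 + a[n]  # innermost denominator
    for k in range(n - 1, 0, -1):
        tail = 1 + a[k] - a[k + 1] / tail
    return a[0] / (1 - a[1] / tail)


# Partial sum of e = sum of 1/k!, written as running products with a0 = 1, a_k = 1/k.
coeffs = [1] + [Fraction(1, k) for k in range(1, 6)]
print(series_sum(coeffs), euler_continued_fraction(coeffs))  # 163/60 163/60
```

Both sides equal 1 + 1 + 1/2! + 1/3! + 1/4! + 1/5! = 163/60; the continued fraction is just the series in a different shape.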

A recent paper, “CoFrNets: Interpretable Neural Architecture Inspired by Continued Fractions,” explores building neural networks out of continued fractions. Across 13 pages, the authors argue that continued fractions, like multilayer perceptrons (MLPs), are universal approximators. Along the way they rename continued fractions ‘ladders,’ call ordinary division ‘the 1/z nonlinearity,’ and present generalized continued fractions as CoFrNets. The paper is full of what looks like unnecessary complexity, all while repackaging centuries-old mathematics for gain.

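A simple continued fraction has the form

$$b_0 + \cfrac{1}{b_1 + \cfrac{1}{b_2 + \cfrac{1}{b_3 + \cdots}}}$$

where the $b_i$ are integers (positive after $b_0$). Truncating it after $b_n$ and simplifying yields a fraction $p_n/q_n$, the $n$th convergent. Mathematicians have long used convergents to approximate π (22/7 and 355/113 are two famous examples) and to design gear systems. A generalized continued fraction replaces the 1s with arbitrary partial numerators $a_i$:

$$b_0 + \cfrac{a_1}{b_1 + \cfrac{a_2}{b_2 + \cfrac{a_3}{b_3 + \cdots}}}$$

where the $a_i$ and $b_i$ may be integers, real numbers, or even polynomials.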
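
Convergents do not have to be recomputed from the bottom up each time. For $b_0 + \cfrac{a_1}{b_1 + \cfrac{a_2}{b_2 + \cdots}}$, the classical fundamental recurrence $A_n = b_n A_{n-1} + a_n A_{n-2}$, $B_n = b_n B_{n-1} + a_n B_{n-2}$ (starting from $A_{-1} = 1$, $A_0 = b_0$, $B_{-1} = 0$, $B_0 = 1$) produces each convergent $A_n/B_n$ from the previous two. Below is a minimal plain-Python sketch; the function names are our own. With every $a_n = 1$ it gives the simple-fraction convergents $p_n/q_n$ of π, and Lambert's continued fraction $\tanh x = \cfrac{x}{1 + \cfrac{x^2}{3 + \cfrac{x^2}{5 + \cdots}}}$ shows polynomial partial numerators at work.

```python
import math
from fractions import Fraction


def convergents(b0, terms):
    """Yield (A_n, B_n) for b0 + a1/(b1 + a2/(b2 + ...)).

    `terms` is an iterable of (a_n, b_n) pairs; A_n / B_n is the n-th convergent.
    """
    A_prev, A = 1, b0  # A_{-1}, A_0
    B_prev, B = 0, 1   # B_{-1}, B_0
    yield A, B
    for a_n, b_n in terms:
        A_prev, A = A, b_n * A + a_n * A_prev
        B_prev, B = B, b_n * B + a_n * B_prev
        yield A, B


# Simple continued fraction of pi: [3; 7, 15, 1, 292, ...], all partial numerators 1.
pi_terms = [(1, b) for b in (7, 15, 1, 292)]
pi_convergents = [Fraction(A, B) for A, B in convergents(3, pi_terms)]
print([str(f) for f in pi_convergents])  # ['3', '22/7', '333/106', '355/113', '103993/33102']


def tanh_cf(x, depth=10):
    """Approximate tanh(x) with Lambert's continued fraction, truncated after `depth` terms."""
    terms = [(x, 1)] + [(x * x, 2 * k - 1) for k in range(2, depth + 1)]
    *_, (A, B) = convergents(0.0, terms)
    return A / B


print(tanh_cf(1.0), math.tanh(1.0))
```

The same recurrence handles integer, real, and polynomial-valued terms, which is exactly the flexibility the generalized form adds.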

The authors use the term ‘ladder’ in place of ‘continued fraction’ throughout their architecture, concealing that they are essentially reinventing an existing concept. By implementing a continued fraction library in PyTorch and placing a reciprocal (1/z) nonlinearity between linear layers instead of ReLU, they build a model that resembles a generalized continued fraction.
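
To make the correspondence concrete, here is a toy, framework-free sketch of a ladder whose rungs are linear functions of the input, $a_k(\mathbf{x}) = \mathbf{w}_k \cdot \mathbf{x} + c_k$, combined as $a_0 + 1/(a_1 + 1/(a_2 + \cdots))$, with several ladders mixed by a final weighted sum. All names are hypothetical, and clamping $|z|$ away from zero is our own assumed safeguard against the pole of 1/z, not necessarily what the paper does.

```python
import random


def safe_reciprocal(z, eps=1e-2):
    """1/z with |z| clamped to at least eps (assumed safeguard against the pole at 0)."""
    if abs(z) < eps:
        z = eps if z >= 0 else -eps
    return 1.0 / z


def linear(w, c, x):
    """A single linear unit: w . x + c."""
    return sum(wi * xi for wi, xi in zip(w, x)) + c


def ladder(rungs, x):
    """Evaluate a0(x) + 1/(a1(x) + 1/(... + 1/ad(x))), each rung a (w, c) pair."""
    z = linear(*rungs[-1], x)  # deepest rung
    for w, c in reversed(rungs[:-1]):
        z = linear(w, c, x) + safe_reciprocal(z)  # the "1/z nonlinearity" in place of ReLU
    return z


def cofrnet_like(ladders, out_weights, x):
    """Hypothetical toy model: a weighted sum of ladder outputs."""
    return sum(v * ladder(rungs, x) for v, rungs in zip(out_weights, ladders))


random.seed(0)
dim, depth, n_ladders = 3, 4, 2
ladders = [
    [([random.gauss(0, 1) for _ in range(dim)], random.gauss(0, 1)) for _ in range(depth)]
    for _ in range(n_ladders)
]
print(cofrnet_like(ladders, [0.5, -1.0], [1.0, 2.0, 3.0]))
```

Written this way, each ladder is literally a generalized continued fraction whose terms happen to be linear in the input.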

On a non-linear waveform dataset, CoFrNet reaches 61% accuracy, well short of state-of-the-art (SOTA) results. This is to be expected, given the limited differentiability of the power series underlying continued fractions. Although the authors compute gradients with PyTorch’s autodiff engine on top of their continued fraction library, optimizing what is in effect an infinite series through differentiation has inherent constraints.
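
For a sense of what the autodiff engine computes through a ladder, the sketch below uses forward-mode dual numbers in plain Python instead of PyTorch’s reverse-mode backward(); both yield the same derivative, and the Dual class is our own illustration. It also makes one constraint visible: the derivative of 1/z is -1/z², so gradients grow without bound whenever a rung’s denominator approaches zero.

```python
class Dual:
    """A dual number val + der*eps with eps**2 == 0; der carries d/dx."""

    def __init__(self, val, der=0.0):
        self.val, self.der = val, der

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.val + other.val, self.der + other.der)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.val * other.val, self.der * other.val + self.val * other.der)

    __rmul__ = __mul__


def recip(z):
    """1/z for floats or Duals; for Duals, d(1/z) = -dz / z**2."""
    if isinstance(z, Dual):
        return Dual(1.0 / z.val, -z.der / z.val ** 2)
    return 1.0 / z


def scalar_ladder(x, rungs):
    """f(x) = (w0*x + c0) + 1/((w1*x + c1) + 1/(... + 1/(wd*x + cd)))."""
    w, c = rungs[-1]
    z = w * x + c
    for w, c in reversed(rungs[:-1]):
        z = w * x + c + recip(z)
    return z


rungs = [(1.0, 0.5), (2.0, 1.0), (0.5, 3.0)]
x0, h = 0.7, 1e-6
autodiff = scalar_ladder(Dual(x0, 1.0), rungs).der
finite_diff = (scalar_ladder(x0 + h, rungs) - scalar_ladder(x0 - h, rungs)) / (2 * h)
print(autodiff, finite_diff)

# Near a pole the local derivative explodes: d(1/z)/dz at z = 1e-3 is about -1e6.
print(recip(Dual(1e-3, 1.0)).der)
```

The dual-number derivative agrees with a central finite difference, which is all backward() does here too: it applies the chain rule to the same nested reciprocals.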

Continued fractions predate IBM itself, and their differentiability properties are well understood. Even so, in 2022 the authors filed a buzzword-laden patent application based on this work. This is troubling, since they introduced nothing beyond what Euler had already explored in 1785. Under this patent, IBM could pursue legal action against other software providers or individuals who use similar techniques, which could affect mechanical engineers, mathematicians, educators, numerical analysts, and computational scientists, among others.

Let’s unite against patent trolls like IBM to protect open access to mathematical knowledge and innovation.